Anyone recently received a weird text message with a dodgy link in it from “HMRC”, “Hermes”, “DHL” or even “Royail Mail”? Yeah, us too - it’s smishing, and it’s rampant in the UK. So, what can we do about it? Prompted by an investigation from Which?, we gave detection a go…

What is it?

If you have a phone then you’ve probably seen something like it, but you may not have seen the term smishing, a somewhat ugly portmanteau of SMS and phishing (see also: vishing for voice phishing aka malicious phone calls).

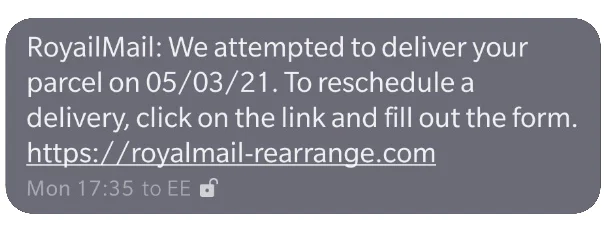

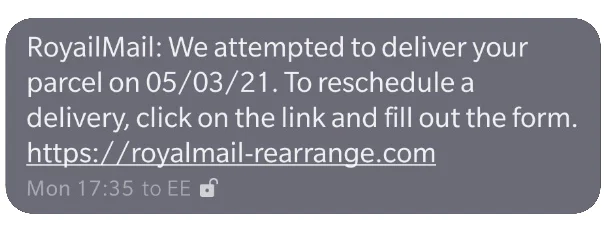

And if you spotted the typo in the introduction, you’re more focused on details than the spammers are:

Sending malicious SMS has been a spamming technique for some time now, but it does seem to have ramped up in the last few months. Which? did a survey last month and found 61% of people had received one recently. It might be anecdotal, but I get a lot more of these messages to my work EE number, which I’ve had for a few months, than I do to my personal Vodafone number I’ve had for years. Maybe this is because the EE number, which is newer, has been recycled and is already on a targeting list.

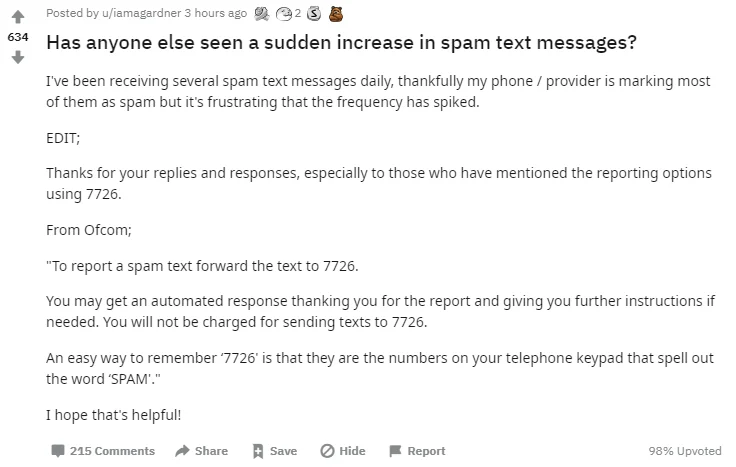

Between a few of us at work, in recent weeks we’ve received fake messages that purport to be from TSB, Royal Mail, HMRC, Hermes, HSBC, EE, Lloyds Bank and Netflix. And it’s clearly not just us, as evidenced by this recent AskUK Reddit post:

If you’re technical, and can spot a malicious URL, then you might sneer at people who fall for these scams. But that really doesn’t help anyone, and we have learned from email phishing that it’s not about user education - people quite reasonably sometimes need to be protected against themselves. Because the consequences can be severe:

Drama student Emmeline Hartley moved £1,000 out of her account after a man claiming to be from Barclays called. The number appeared to be genuine, but it was a spoof. The 28-year-old’s bank has now agreed to fully reimburse her. Her story has been retweeted thousands of times and others have shared their experiences in response to her post. “I am usually very good at not falling for scams but this one caught me off-guard at a pretty vulnerable time in my life,” the Birmingham student said. “I thought I had done my due diligence but it was not enough.”

“Royal Mail scam: Fake text led to drama student being defrauded”, BBC 23/03/2021

A recent escalation has seen spammers move to pushing malicious applications on victims, as covered in this BBC story, which was serious enough that it prompted the NCSC to issue guidance.

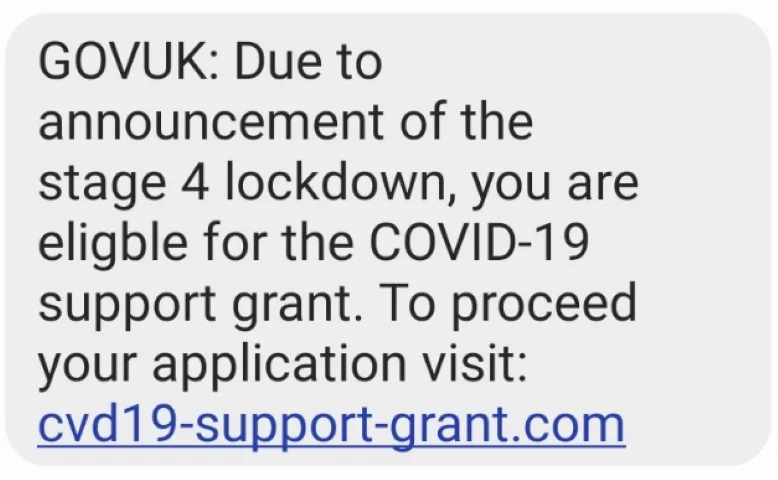

There’s also, somewhat inevitably, pandemic related spoofing:

Thankfully, one group supposedly behind a Royal Mail smishing campaign were recently arrested. But there’ll undoubtedly be more to come, as the problems with SMS aren’t going away. The primary issue is the complete lack of security in the decades-old SMS protocol itself. There is an established marketplace for selling and buying mass SMS equipment and services, as detailed in this article from Krebs on Security.

Common Format

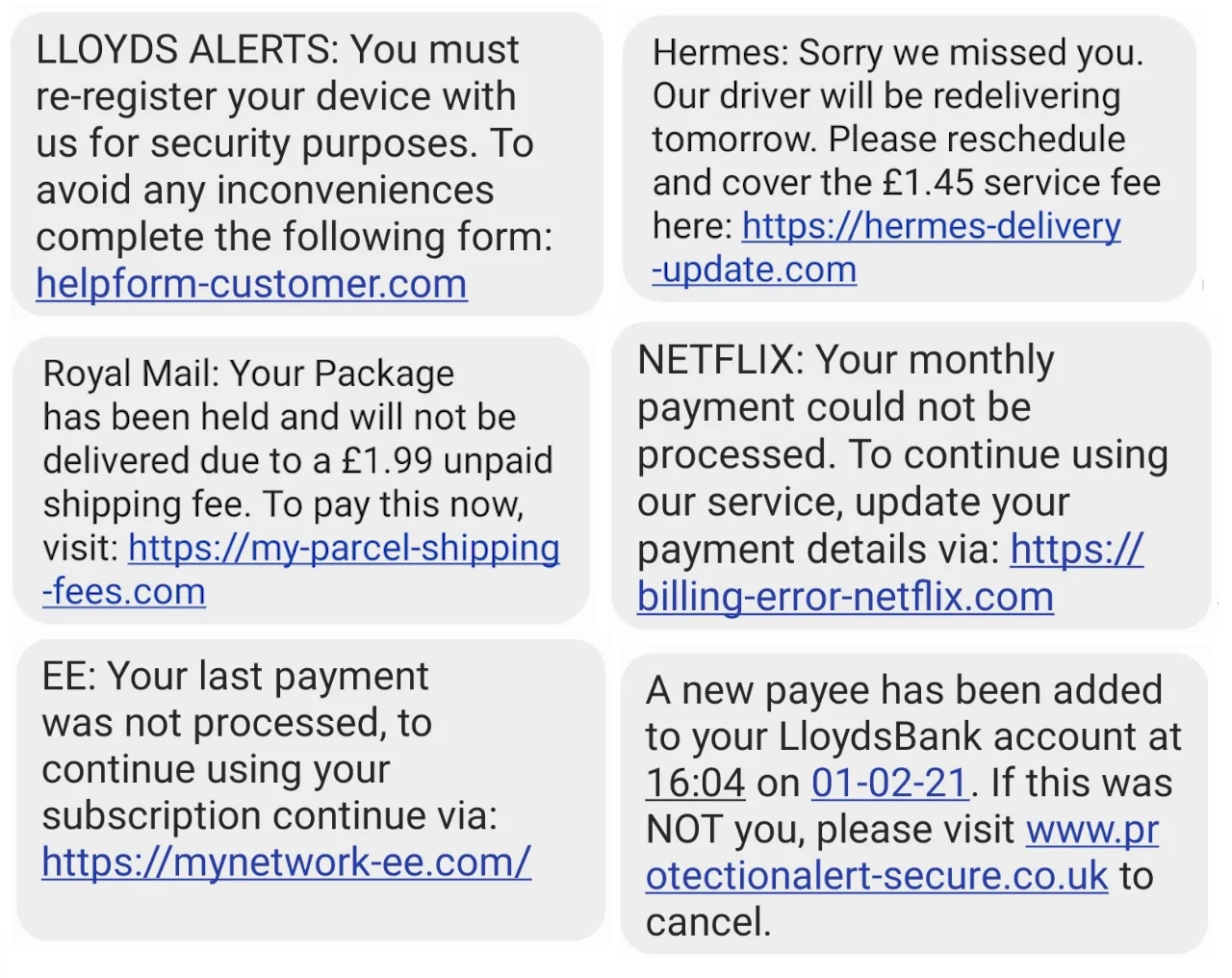

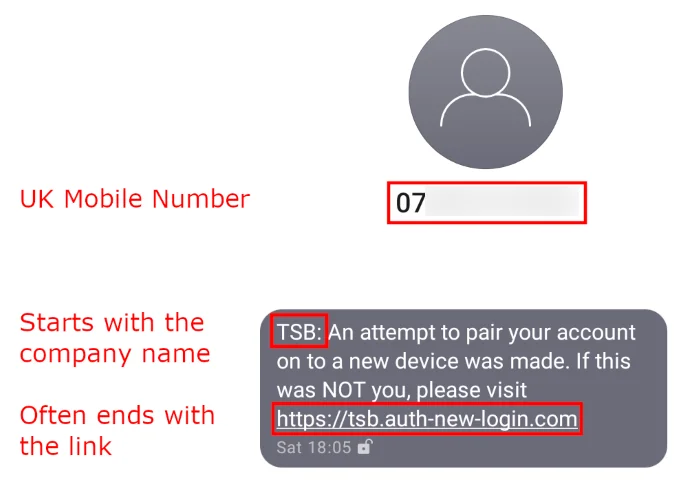

We collated a few recent messages to produce the montage below:

Viewed collectively, a repeated format is quite clear for all but the last message:

This might not mean anything, or it could be a sign they’re all from the same people, or a range of people with the same ideas. Because they can’t send registered messages (which arrive from a name, not a number), they’re instead starting messages with the name of the “company”.

Advice, and Spotting Scams

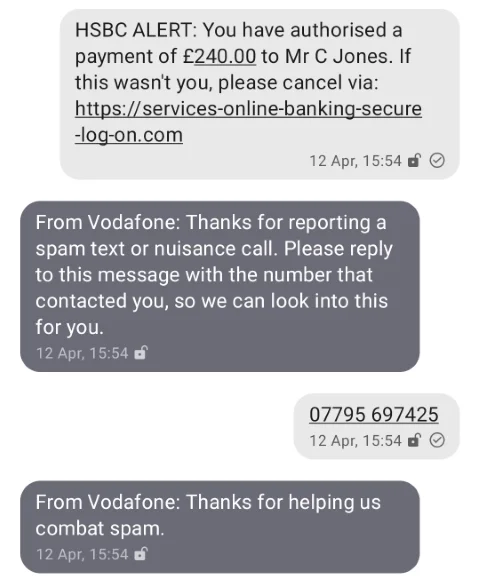

As mentioned in the Reddit post above, regardless of your network operator you can forward messages to 7726 to report them as spam.

Some companies are forward about helping users avoid scams. Royal Mail, for example, have been quite clear on Twitter in telling people they never send SMS messages related to delivery charges:

There seem to be very few legitimate messages from the mentioned companies that include links; in fact I can’t recall the last time I received a legitimate message including a URL.

If you’re wondering what sensible advice might be, and want a blanket policy to advise others: if in doubt, ignore it. A safety-first approach would be to ignore or remove all messages containing a URL that don’t come from a registered number or short code.

For further advice, see the regular authorities, including:

Detecting Smishing Messages

Google’s Android messaging application does spam detection, filing away suspicious looking senders automatically, and they have a mechanism for validating messages from registered companies with their Verified SMS programme. If you have to advise an Android user what to do about smishing messages, having them use the Google Messages app as their default SMS application is the best thing to do.

Messages offers good protection, but not everyone uses Google’s messaging app - for example, Samsung have their own app that’s the default on their devices, and some people (me) use Signal to also deal with SMS. Such fragmentation on Android makes for imperfect defensive solutions; ideally such protections could be built into Android so they’re working regardless of what SMS app people use, but even then you have the issue of phone manufacturers lagging behind Google’s releases.

On iOS, whilst Apple’s privacy policies prevent third-party SMS applications, there is a mechanism (documented in this SDK page) that lets developers write an SMS filter application. Some apps that do this exist on the App Store, but we’ve not tested any. Within the iOS operating system there’s a setting to block messages from unknown contacts. It’s a sensible measure, but it’s worth remembering that spoofing a legitimate number or company name is not beyond the capability of some attackers.

We thought it’d be interesting to have a go at automatically detecting malicious messages on Android, so we implemented an approach based on inspecting the message sender, content and any contained links.

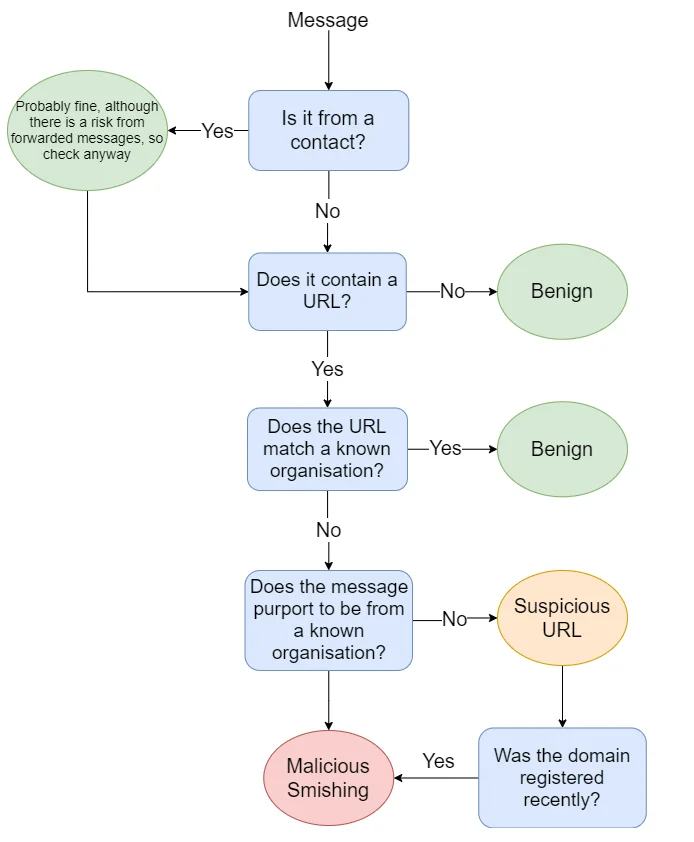

Our detection logic is something like this:

Let’s look at this process in more detail.

1. Inspecting the Sender

First off, we can look at who sent the message. A message from a contact or from a messaging code (such as a company name) is typically less suspicious than one from an unknown number, so that’s the first thing we can check.
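As a rough illustration of that first check, here is a minimal Python sketch (not the SmishSmash code itself). The category names, the `normalise` helper and the six-digit cut-off for short codes are all assumptions made for the example.

```python
import re

# Hypothetical categories; the names are illustrative, not taken from SmishSmash.
CONTACT = "contact"
SENDER_ID = "sender_id"            # alphanumeric name such as "RoyalMail"
SHORT_CODE = "short_code"          # short numeric code such as 7726
UNKNOWN_NUMBER = "unknown_number"


def normalise(number: str) -> str:
    """Strip spaces, dashes and brackets, and map UK +44 / 0044 prefixes to a leading 0."""
    cleaned = re.sub(r"[\s\-()]", "", number)
    if cleaned.startswith("+44"):
        return "0" + cleaned[3:]
    if cleaned.startswith("0044"):
        return "0" + cleaned[4:]
    return cleaned


def classify_sender(sender: str, contacts: set[str]) -> str:
    """Place a sender into one of the four categories above."""
    cleaned = normalise(sender)
    if cleaned in {normalise(c) for c in contacts}:
        return CONTACT
    if re.search(r"[A-Za-z]", sender):
        return SENDER_ID
    # Assumption: anything numeric with six digits or fewer is treated as a short code.
    if cleaned.isdigit() and len(cleaned) <= 6:
        return SHORT_CODE
    return UNKNOWN_NUMBER


contacts = {"07700 900123"}
print(classify_sender("+44 7700 900123", contacts))
print(classify_sender("RoyalMail", contacts))
print(classify_sender("+447700900999", contacts))
```

Only the last category would then go on to the closer inspection of content and links.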

There is a mobile industry initiative for validating the use of messaging codes, which at least makes it harder to spoof messages that come from a company name rather than a number, but it’s not foolproof (for more information on SMS Sender ID, see this blog).

2. Spotting Malicious Links

If a message doesn’t contain a link, we know it’s not smishing. Based on all the samples of legitimate and malicious messages we could collect, we built a list of UK companies who send messages, their valid domains and associated keywords, and stuck it all into a JSON file. For example, if we take the Royal Mail, there are some solid keywords and three domains:

```json
{
  "name": "Royal Mail",
  "keywords": [
    "Royal Mail",
    "mail"
  ],
  "domains": [
    "royalmail.com",
    "ryml.me",
    "postoffice.co.uk"
  ]
}
```

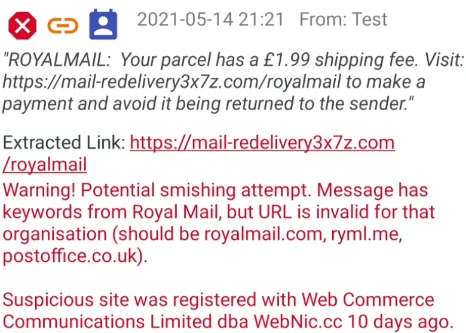

If we take the above data, and the following message, we can compare the two:

Ignoring the typo in the message (detection is never perfect), it’ll match the keyword royalmail. We can see that the URL doesn’t match any of the three we were expecting for this organisation, nor is it in our list for other organisations, so we can mark it as suspicious.
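The comparison can be sketched in a few lines of Python. This is an illustration under assumptions rather than the app’s actual code: the URL regular expression is deliberately simple, the function names are made up for the example, and the sample message and its `.example` domain are invented.

```python
import re
from urllib.parse import urlparse

# The Royal Mail entry from the JSON above; a real list would hold many organisations.
ORGANISATIONS = [
    {
        "name": "Royal Mail",
        "keywords": ["Royal Mail", "mail"],
        "domains": ["royalmail.com", "ryml.me", "postoffice.co.uk"],
    },
]

# Simplified pattern: optional scheme, dotted host name, optional path.
URL_PATTERN = re.compile(
    r"(?:https?://)?(?:[a-z0-9-]+\.)+[a-z]{2,}(?:/\S*)?", re.IGNORECASE
)


def extract_hosts(text):
    """Return the lower-cased host name of every link-like string in the text."""
    hosts = []
    for match in URL_PATTERN.findall(text):
        url = match if "://" in match else "http://" + match
        host = urlparse(url).hostname
        if host:
            hosts.append(host.lower())
    return hosts


def host_matches(host, domain):
    """True for the domain itself or any subdomain of it, but not lookalikes."""
    return host == domain or host.endswith("." + domain)


def inspect_message(text, organisations=ORGANISATIONS):
    """Return (organisations mentioned, hosts that are not on any organisation's list)."""
    lowered = text.lower()
    mentioned = [
        org["name"]
        for org in organisations
        if any(keyword.lower() in lowered for keyword in org["keywords"])
    ]
    known = [domain for org in organisations for domain in org["domains"]]
    unexpected = [
        host
        for host in extract_hosts(text)
        if not any(host_matches(host, domain) for domain in known)
    ]
    return mentioned, unexpected


message = (
    "Royal Mail: Your parcel is awaiting delivery. Please confirm the "
    "settlement of 2.99 GBP via: royalmail-redelivery.example"
)
print(inspect_message(message))
```

A message that mentions a listed organisation and also contains an unexpected host is the combination we treat as suspicious. Matching on whole host names means a lookalike such as `royalmail.com.evil.example` does not pass as `royalmail.com`.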

Of course, there is a danger in using the patterns from existing messages for detection, as you’re always on the back-foot reacting to the attacker. But a working technique is better than nothing.

As mentioned in a recent NCSC report (covered by the Register), a large number of malicious domains are registered with Namecheap. A colleague suggested that the registrar and the time since registration could also be used for detecting suspicious links. Doing whois lookups of all the domains in our sample messages, we noticed that:

- Many aren’t live anymore.

- All were registered with Namecheap.

- All were registered in the last 5 months, with most of them being less than a month old.

So inspecting the whois data might be particularly effective when comparing malicious domains to valid domains. For example, let’s compare a legitimate domain and a spoofed variant:

| | Valid | Malicious |
|---|---|---|
| Domain | myhermes.co.uk | gohermes-reschedule.com |
| Registrar | Key-Systems | Namecheap |
| Registered | 30/01/2007 | 10/05/2021 |
| Updated | 16/01/2021 | 12/05/2021 |

We can see there’s a clear difference between the legitimate and spoofed domains. There’s likely to be a large age difference between malicious domains and the valid domains they’re trying to spoof, which can be used for detection. This technique is especially useful for links that we’re unsure about, as a link we don’t recognise that was recently registered is likely malicious.
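To make the age comparison concrete, here is a small Python sketch using the registration dates from the table. It assumes the dates have already been obtained (for example from a whois lookup, which is not performed here). The 150-day threshold is an assumption loosely based on the “last 5 months” observation, and the “today” date is chosen purely for illustration.

```python
from datetime import date


def parse_uk_date(text):
    """Parse a dd/mm/yyyy string, the format used in the table above."""
    day, month, year = (int(part) for part in text.split("/"))
    return date(year, month, day)


def domain_age_days(registered, today):
    """Number of days between registration and the given date."""
    return (today - registered).days


def recently_registered(registered, today, max_age_days=150):
    """Assumed rule of thumb: a domain under about five months old counts as recent."""
    return domain_age_days(registered, today) <= max_age_days


today = date(2021, 6, 29)  # illustrative date only
registrations = {
    "myhermes.co.uk": parse_uk_date("30/01/2007"),
    "gohermes-reschedule.com": parse_uk_date("10/05/2021"),
}
for domain, registered in registrations.items():
    print(domain, domain_age_days(registered, today), recently_registered(registered, today))
```

On its own a young domain proves nothing, so in practice this would be one signal combined with the keyword and domain checks rather than a verdict by itself.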

3. Building a Proof-of-Concept: SmishSmash

We implemented the above logic into a basic Android application, which gets all the inbox messages and tests them one by one. And it works pretty well at detecting the messages we’ve been able to collect.

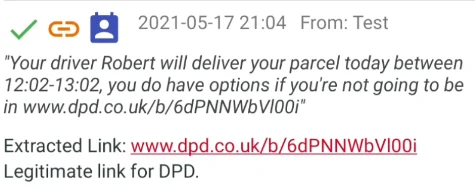

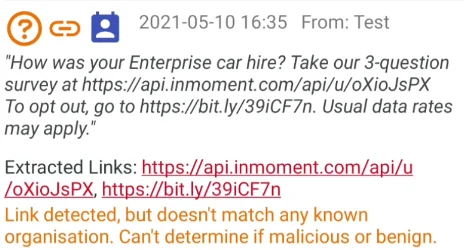

All messages with a link are marked with a link icon, and if the link matches a known domain it’s given a tick:

Messages from a known contact are also marked - all the examples here I’ve had to forward to my test phone, hence the contact icon in all the examples.

Links that don’t match a known domain but also don’t have signs of smishing are marked with a question mark:

The above example also shows one limitation of this approach: link shorteners such as bit.ly make links harder to inspect.
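One modest mitigation is to at least recognise when a link goes through a shortener, so it can be treated as unknown rather than trusted. The Python sketch below shows the idea. Only bit.ly comes from the example above; the other hosts in the list, and the function name, are assumptions added for illustration.

```python
from urllib.parse import urlparse

# bit.ly is the shortener named above; the other hosts are illustrative additions.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "t.co", "ow.ly"}


def is_shortened(url: str) -> bool:
    """Return True if the URL's host is on the shortener list."""
    if "://" not in url:
        url = "http://" + url  # urlparse needs a scheme to find the host
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host in SHORTENER_HOSTS


print(is_shortened("https://bit.ly/3abcXYZ"))
print(is_shortened("https://www.royalmail.com/track"))
```

Finding the real destination would mean following the redirect, which this sketch deliberately does not attempt.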

Messages with a link that doesn’t match a known domain, and that contain keywords from a known organisation, are marked as malicious:

If you long-press on a message you can either forward it to someone, or report it to the spam reporting number 7726:

It copies the sender’s number to clipboard, as that’s what the reporting number asks for next:

It’s quite a simple application, but it works nicely against all the sample messages we could collate. And it proves our point: it’s possible to accurately detect these messages.
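The marking rules described above (tick, question mark, malicious) boil down to a small decision function. The Python sketch below is our summary of those rules rather than the code from the app. The `Verdict` enum and the parameter names are hypothetical, and the three inputs are assumed to have been worked out by earlier checks.

```python
from enum import Enum


class Verdict(Enum):
    NO_LINK = "no link"
    KNOWN = "tick"
    UNKNOWN = "question mark"
    MALICIOUS = "malicious"


def mark_message(has_link: bool, link_is_known: bool, mentions_known_org: bool) -> Verdict:
    """Map the results of the earlier checks onto the marker shown next to a message."""
    if not has_link:
        return Verdict.NO_LINK      # nothing to click, so nothing to flag
    if link_is_known:
        return Verdict.KNOWN        # link matches a listed domain
    if mentions_known_org:
        return Verdict.MALICIOUS    # names an organisation but links elsewhere
    return Verdict.UNKNOWN          # unrecognised link, no other warning signs


print(mark_message(has_link=True, link_is_known=False, mentions_known_org=True))
```

The order of the checks matters: a link that matches a known domain gets a tick even when the organisation’s keywords appear, which is what lets legitimate messages through.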

A more fully featured application could have a background service that inspects messages as they arrive, and warns the user or just silently removes the messages, as the Google Messages application does. It could also allow users to submit messages for inspection, and dynamically load information on valid domains (SmishSmash loads it from a JSON resource file). If someone wants to take this on or collaborate, give us a shout.

4. Releasing a Proof-of-Concept Application

Alas, building an application was the easy part. When adding it to the Google Play Store as a beta application, publishing was blocked by the Google app checks. To publish an app with the READ_SMS permission there are two requirements that need to be met. Firstly, you need to make the app ask to be the default SMS app, which isn’t appropriate here as it’s not a fully fledged messaging app. Secondly, to qualify as an anti-smishing app we need to prove our “track record of significant protection for users”. We sent over this blog, plus references to previous blogs and collaborations with Which?, but received the following reply:

You must have a track record of significant protection for users, as reflected in analyst reports, benchmark test results, industry publications, and other credible sources of information, to be eligible for implementing this use case…your app does not qualify for use of the requested permissions for the “Anti-SMS phishing” core functionality. The information you provided was not sufficient to indicate a track record of significant protection for users.

The Google Play Team

Clearly, it’s a good thing that Google applies some rigour to inspecting applications, especially those that want access to sensitive things like SMS. And it makes sense to vet apps that purport to do something security-related. But it’s hard to see how this would allow a new developer into the security space, unless they already had, for example, desktop security products.

Instead, we’ve put the code onto GitHub, although as a security company we should be clear that most people shouldn’t be building and deploying example apps to their phones unless they trust the developer.

What next?

We’ve shown it’s technically possible to spot recent examples of malicious messages by inspecting the content and the links. Rather than doing this on an individual Android phone, it would be better if both Android and iOS did such detection. Or even better would be for the mobile operators to implement something at the network level. There may be privacy concerns about inspecting the content of all SMS messages, but it could be an opt-in. Detection and inspection of links would relatively easily allow them to spot malicious messages, which they could, for example, warn the user about with another message.

More information

- Three in five people have received a scam delivery text in the past year, Which?, 29/06/2021

- Fake delivery scam texts soar in pandemic with 60% of Britons targeted, the Guardian, 29/06/2021

- The relentless rise of Royal Mail text message scams, Wired, 21/05/2021

- City of London Police