A couple of weeks ago, this Microsoft announcement about a new hardware security module, Pluton, came out without much fanfare, even though it could represent a big change for the security of new Windows devices. This post provides some background on the use of dedicated hardware security modules on different platforms, and highlights their relative strengths and weaknesses.

Background

At the risk of starting with hyperbole, a lot of the major platform security improvements we’ve seen in the last ten years have some element of hardware security at their root, and probably wouldn’t exist without it.

One of the earliest common examples is the use of a Hardware Security Module (HSM) to store keys, such as a server’s TLS keys. This gives us a self-contained security function to which the server can offload cryptographic operations such as signing and encryption. That provides two benefits: offloading computationally expensive cryptographic operations frees up resources on the server, and hosting the keys in dedicated hardware makes them much harder to steal.

Crucial to hosting the keys securely is that the HSM provides no mechanism for extracting them; it only gives access to functions that use them. We won’t cover HSMs in any more detail here, other than to highlight some nice research from last year that detailed a new attack against a PCIe HSM, using custom firmware loaded onto the HSM to attack it (see the BlackHat slides).
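To make the “use, but never extract” property concrete, here’s a minimal Python sketch of the interface shape an HSM presents. `ToyHSM` and its methods are hypothetical names, and HMAC-SHA256 from the standard library stands in for the asymmetric signing a real TLS key would perform. Python can’t actually hide the key from the host process; in a real HSM that isolation is enforced by the hardware.

```python
import hashlib
import hmac
import secrets


class ToyHSM:
    """Hypothetical HSM stand-in: keys are generated and held internally,
    and callers only get operations that *use* them, never the key bytes.

    Note: Python cannot enforce this boundary (name mangling is not
    protection); a real HSM enforces it in hardware.
    """

    def __init__(self) -> None:
        self.__keys = {}  # key handle -> secret key bytes, never returned

    def generate_key(self, handle: str) -> None:
        # The key is created inside the "module"; only the handle is exposed.
        self.__keys[handle] = secrets.token_bytes(32)

    def sign(self, handle: str, message: bytes) -> bytes:
        # HMAC-SHA256 stands in for the RSA/ECDSA signing a TLS key would do.
        return hmac.new(self.__keys[handle], message, hashlib.sha256).digest()

    def verify(self, handle: str, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison of the expected and supplied tags.
        return hmac.compare_digest(self.sign(handle, message), tag)


hsm = ToyHSM()
hsm.generate_key("tls-server")
tag = hsm.sign("tls-server", b"handshake data")
print(hsm.verify("tls-server", b"handshake data", tag))  # True
```

There’s deliberately no method that returns a key: the host can ask for signatures, but the only route to the key itself is attacking the module, which is what the PCIe HSM research did.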

While HSMs are common on servers, they’re rare anywhere else. But we do have analogous examples on all the major platforms: Windows computers with a TPM, Apple Macs and their T2 chip, iOS devices and the Secure Enclave Processor (SEP), and Android devices and ARM TrustZone.

All of these examples provide dedicated hardware security functions, but they vary widely in how they are implemented and integrated into their respective platforms. Let’s look at the different approaches in a bit more detail.

Common Hardware Security Modules

TPMs and T2 Chips

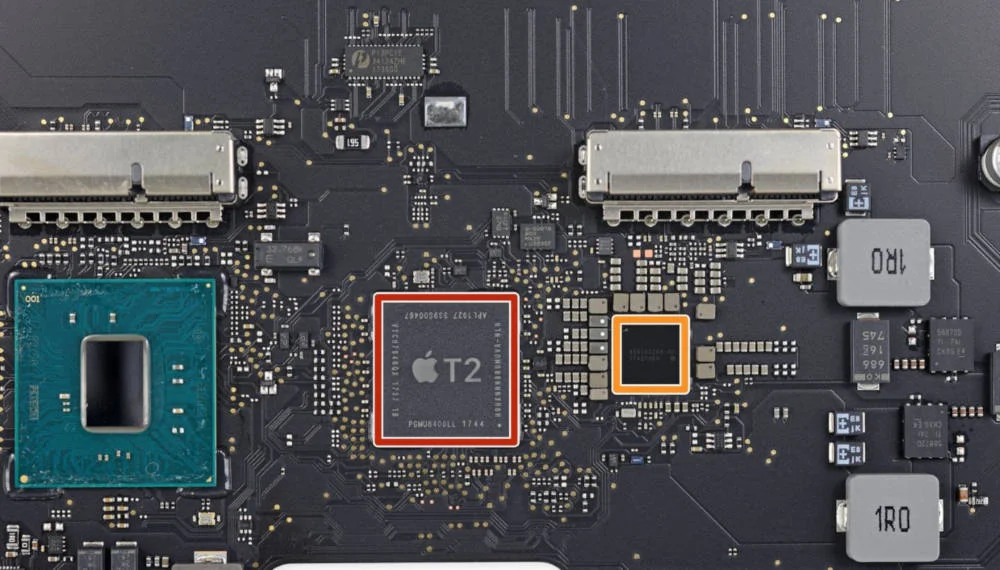

Starting with computer platforms, we have equivalent devices in the Windows and Mac worlds. TPMs have been around on Windows machines for a while now: they became more common with the release of BitLocker in Windows Vista, and were formally standardised in 2009. As Microsoft rightly says, the TPM was “the first broadly available hardware root of trust”. Its Mac equivalent, the T2 chip, first appeared in the iMac Pro at the end of 2017.

The TPM and T2 chips are functionally very similar, both being distinct chips mounted on a computer’s motherboard. They provide the lowest-level root of trust on their respective platforms, most commonly for secure boot and for full disk or volume encryption. Secure boot uses the hardware module to store and verify boot parameters, while disk encryption uses it to store keys and perform key wrapping and unwrapping.
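On a TPM, the “store and verify boot parameters” step is typically done with measured boot: each boot stage is hashed into a Platform Configuration Register (PCR) with an extend operation, so the final value depends on every component and on the order they ran in. Below is a simplified Python sketch assuming a single SHA-256 PCR bank; the function names are hypothetical, and a real TPM also keeps an event log and exposes this through commands such as TPM2_PCR_Extend.

```python
import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 PCR bank


def extend(pcr: bytes, component: bytes) -> bytes:
    """Extend a PCR: new = SHA-256(old || SHA-256(component))."""
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + digest).digest()


def measure_boot_chain(components: list) -> bytes:
    """Fold each boot stage, in order, into a PCR that starts at all zeros."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


golden = measure_boot_chain([b"firmware v1", b"bootloader v1", b"kernel v1"])
tampered = measure_boot_chain([b"firmware v1", b"evil bootloader", b"kernel v1"])
print(golden == tampered)  # False
```

In this model software can only extend the PCR, never write it directly, so a modified boot stage can’t simply overwrite its measurement with the expected value.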

Because the security modules are physically separate devices, they have to be connected to the main processor (typically an x86 processor) over some kind of interface. This means they may be susceptible to attacks over that interface, as in this TPM example, particularly in scenarios such as password-less unlocking, where the key is released without any user input.
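The password-less case is worth spelling out. In a TPM-only unlock, the key is sealed to the expected boot measurements and released automatically when they match, so unless the traffic is protected it crosses the bus to the CPU in the clear, with no user secret involved. The Python sketch below models that flow; `ToyTPM`, `bus_log` and `passwordless_unlock` are hypothetical names, and the bus is modelled as a list a sniffer could read. It’s a simplification, not a description of any specific attack.

```python
import hashlib
import hmac


class ToyTPM:
    """Hypothetical discrete TPM that releases a sealed secret only when
    the current PCR value matches the value it was sealed against."""

    def __init__(self) -> None:
        self.pcr = bytes(32)
        self._sealed = {}  # name -> (expected PCR, secret)

    def extend(self, component: bytes) -> None:
        digest = hashlib.sha256(component).digest()
        self.pcr = hashlib.sha256(self.pcr + digest).digest()

    def seal(self, name: str, secret: bytes) -> None:
        # Bind the secret to the boot state measured so far.
        self._sealed[name] = (self.pcr, secret)

    def unseal(self, name: str) -> bytes:
        expected, secret = self._sealed[name]
        if not hmac.compare_digest(self.pcr, expected):
            raise PermissionError("boot measurements changed; refusing to unseal")
        return secret


bus_log = []  # stands in for a logic analyser clipped onto the TPM bus


def passwordless_unlock(tpm: ToyTPM) -> bytes:
    # No PIN or password: the only check is the PCR match inside the TPM.
    key = tpm.unseal("volume-key")
    bus_log.append(key)  # the released key travels to the CPU unprotected
    return key


tpm = ToyTPM()
for stage in (b"firmware", b"bootloader"):
    tpm.extend(stage)
tpm.seal("volume-key", b"\x13" * 32)
print(passwordless_unlock(tpm) == bus_log[-1])  # True: the sniffer has the key
```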

Of course, the newest Macs don’t use x86 processors at all, following Apple’s move to “Apple silicon” (custom ARM-based processors) for its new range of Macs. As a teardown of one of the new Macs found, the T2 chip’s functions are now part of the main M1 processor:

Notably absent in this sea of silicon is the infamous T2 chip. For years building up to this 2020 M1 release, Apple has been offloading numerous tasks (especially security/encryption related things) from Intel’s processors to their own custom T2 chip. Those functions have come home inside the M1, which has a Secure Enclave and a host of built-in security features…

Integrating these functions into the processor negates interface attacks like the one mentioned above, and brings the Mac in line with the approach on iOS, which we’ll look at next.

iOS Secure Enclave

The iPhone 5S, released in 2013, introduced a series of firsts for the iPhone, including the first 64-bit processor. It also brought a step-change in authentication with the introduction of Touch ID. Behind this integrated fingerprint scanner sat a new hardware security device, the Secure Enclave Processor (SEP).

Functionally, the SEP is much the same as the T2 and TPM chips: it holds secrets and performs cryptographic operations with them, such as verifying the user’s PIN and fingerprint. Crucially, though, it is not a component that is physically discrete from the processor.

The SEP is part of the processor’s System-on-Chip (SoC), but runs on a dedicated portion of the silicon. It has its own core, memory and peripherals, and communicates with the main processor via a mailbox protocol, whose main-processor side is implemented as a kernel extension. See this presentation from BlackHat 2016 for more details on the SEP and how it works.
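As a rough illustration of the mailbox model, here’s a Python sketch in which two queues stand in for the hardware mailbox and a thread stands in for the SEP. The message layout (`endpoint`, `opcode`, `payload`), the opcode values and the PIN-check service are all hypothetical, not Apple’s actual protocol; the point is that the main processor only ever sees requests and replies, never the secret the SEP holds.

```python
import hashlib
import hmac
import queue
import threading
from dataclasses import dataclass

OP_CHECK_PIN = 1  # hypothetical opcode
OP_SHUTDOWN = 2   # hypothetical opcode


@dataclass
class Message:
    endpoint: int   # hypothetical: which SEP service the message is for
    opcode: int
    payload: bytes


class Mailbox:
    """Two one-way queues standing in for the hardware mailbox."""

    def __init__(self) -> None:
        self.to_sep = queue.Queue()  # main processor -> SEP
        self.to_ap = queue.Queue()   # SEP -> main processor


def sep_main(mbox: Mailbox, pin: bytes) -> None:
    """Runs 'inside' the SEP; pin is the secret enrolled at setup time.
    Its digest never leaves this function."""
    pin_digest = hashlib.sha256(pin).digest()
    while True:
        msg = mbox.to_sep.get()
        if msg.opcode == OP_SHUTDOWN:
            return
        if msg.opcode == OP_CHECK_PIN:
            attempt = hashlib.sha256(msg.payload).digest()
            ok = hmac.compare_digest(attempt, pin_digest)
            mbox.to_ap.put(Message(msg.endpoint, msg.opcode, b"\x01" if ok else b"\x00"))


def ap_check_pin(mbox: Mailbox, attempt: bytes) -> bool:
    """Kernel-driver side: post a request and wait for the SEP's reply."""
    mbox.to_sep.put(Message(endpoint=0, opcode=OP_CHECK_PIN, payload=attempt))
    reply = mbox.to_ap.get(timeout=1.0)
    return reply.payload == b"\x01"


mbox = Mailbox()
sep = threading.Thread(target=sep_main, args=(mbox, b"1234"), daemon=True)
sep.start()
print(ap_check_pin(mbox, b"1234"), ap_check_pin(mbox, b"0000"))  # True False
mbox.to_sep.put(Message(0, OP_SHUTDOWN, b""))
sep.join()
```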

This approach has a few benefits, chiefly that building it into the SoC silicon means its primary interfaces aren’t exposed. The only signs of the SEP outside the SoC are the buses it uses to communicate with peripherals such as the fingerprint reader. On a densely packed circuit board, it also saves the space an additional component would take up.

It is, in effect, a security co-processor that sits within the SoC but is isolated from the main processor:

The key data is encrypted in the Secure Enclave system on chip (SoC), which includes a random number generator. The Secure Enclave also maintains the integrity of its cryptographic operations even if the device kernel has been compromised

There is one drawback to this approach. Because the SEP sits alongside the main processor, it can’t monitor it. That requires something operating at a higher privilege level than the regular processor, which is what we have with ARM TrustZone.

ARM TrustZone

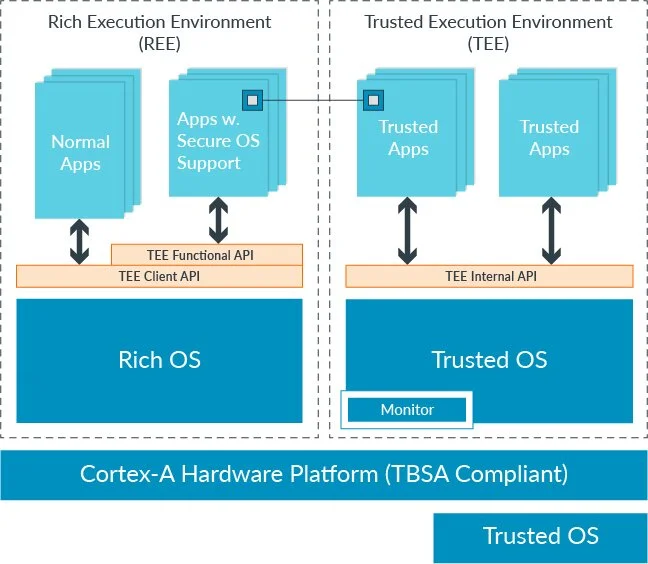

TrustZone is ARM’s set of hardware security extensions, and it’s most commonly used on modern Android devices. Unlike the previous examples, TrustZone is implemented in the processor itself, so it is neither a discrete hardware device nor a co-processor.

Introduced in the ARMv6KZ version of the architecture, TrustZone provides a separate execution environment for the processor, called a Trusted Execution Environment (TEE). This is a secure mode the processor can switch into, which is not visible to anything running in the processor’s normal mode. Cue the standard diagram from ARM:

ARM TrustZone model

Applications that run inside the TEE are called Trustlets, and typically perform the same security functions as in the previous examples. If an application in normal mode needs something done by a Trustlet, a Secure Monitor Call (SMC) is issued, which raises an exception that moves the processor into secure mode. The Trustlet can then do its special security stuff and return the output before the processor goes back to normal mode. As the regular application just sees the call return, it’s as if nothing ever happened.
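The round trip into and out of secure mode can be modelled in a few lines. In this Python sketch, `ToyCPU`, `smc`, `derive_trustlet` and the service ID are hypothetical; on real ARM hardware the switch goes through the Secure Monitor Call (SMC) instruction and monitor firmware, and secure memory is isolated by the hardware rather than by a flag check. What it shows is the shape of the flow: save normal-world state, enter secure mode, run the Trustlet, restore state and return.

```python
import hashlib
import hmac


class ToyCPU:
    """Hypothetical model of a core with TrustZone-style secure and normal modes."""

    def __init__(self) -> None:
        self.secure = False                 # current mode: normal by default
        self.regs = {"r0": 0, "r1": 0}      # state visible to the normal world
        self._secure_mem = {"device_key": b"\x42" * 32}  # secure-mode only
        self._trustlets = {}                # service id -> Trustlet function

    def install_trustlet(self, service_id: int, fn) -> None:
        self._trustlets[service_id] = fn

    def read_secure(self, name: str) -> bytes:
        if not self.secure:
            raise PermissionError("secure memory is not visible from normal mode")
        return self._secure_mem[name]

    def smc(self, service_id: int, arg: bytes) -> bytes:
        """Monitor entry: save state, switch modes, run the Trustlet, switch back."""
        saved = dict(self.regs)
        self.secure = True
        try:
            return self._trustlets[service_id](self, arg)
        finally:
            self.regs = saved     # normal-world state restored...
            self.secure = False   # ...and the core drops back to normal mode


def derive_trustlet(cpu: ToyCPU, arg: bytes) -> bytes:
    # Hypothetical Trustlet: a MAC keyed by a device key it never exposes.
    return hmac.new(cpu.read_secure("device_key"), arg, hashlib.sha256).digest()


cpu = ToyCPU()
cpu.install_trustlet(1, derive_trustlet)
out = cpu.smc(1, b"app request")
print(len(out), cpu.secure)  # 32 False
```

The `finally` clause mirrors the point in the text: however the Trustlet finishes, the caller only observes a return, with its own state intact and the processor back in normal mode.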

TrustZone isn’t an Android-only thing. On iOS devices before the A10 chip, it’s used on the main processor to implement Kernel Patch Protection (KPP, aka “WatchTower”). This runs in Secure Monitor mode and, as the name implies, aims to prevent changes to kernel code once it is loaded, hence the need to sit above the kernel. On A10 chips KPP was replaced by KTRR, which drops the use of Secure Monitor mode in favour of new hardware protections in the memory manager. See more in this presentation from Jonathan Levin.
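Conceptually, KPP’s job is an integrity check on kernel code from a vantage point the kernel can’t tamper with. The Python sketch below shows only that idea: `ToyPatchProtector` and `KernelPanic` are hypothetical names, the “kernel text” is a bytearray of placeholder AArch64 NOP instructions, and the check is called explicitly rather than being driven from Secure Monitor mode as on real devices.

```python
import hashlib


class KernelPanic(Exception):
    """Raised when the monitor detects modified kernel code."""


class ToyPatchProtector:
    """Hypothetical KPP-like monitor: hashes kernel code once it is loaded,
    then re-hashes on each check and panics if anything has changed."""

    def __init__(self, kernel_text: bytearray) -> None:
        self._text = kernel_text  # the monitor can read kernel memory, not vice versa
        self._golden = hashlib.sha256(kernel_text).digest()

    def check(self) -> None:
        if hashlib.sha256(self._text).digest() != self._golden:
            raise KernelPanic("kernel code modified after load")


kernel_text = bytearray(b"\x1f\x20\x03\xd5" * 16)  # AArch64 NOPs as placeholder code
kpp = ToyPatchProtector(kernel_text)
kpp.check()                              # passes: nothing has changed
kernel_text[0:4] = b"\x00\x00\x00\x00"   # simulate an attacker patching code
try:
    kpp.check()
except KernelPanic as err:
    print("panic:", err)  # panic: kernel code modified after load
```

A check like this only catches changes that are still present when it runs, so a patch applied and reverted between two checks goes unnoticed; blocking writes to kernel code in hardware, as KTRR does, doesn’t have that window.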

Microsoft Catching Up

All of this makes Microsoft’s announcement more interesting, as they look to emulate the security co-processor model that Apple have on iOS and Apple silicon Macs:

The Pluton design removes the potential for that communication channel to be attacked by building security directly into the CPU. Windows PCs using the Pluton architecture will first emulate a TPM that works with the existing TPM specifications and APIs, which will allow customers to immediately benefit from enhanced security for Windows features that rely on TPMs like BitLocker and System Guard. Windows devices with Pluton will use the Pluton security processor to protect credentials, user identities, encryption keys, and personal data. None of this information can be removed from Pluton even if an attacker has installed malware or has complete physical possession of the PC.

The release statement lists their “silicon partners” as “AMD, Intel and Qualcomm”, so it sounds like this idea has a lot of backing. Contrary to their claim that it’s “revolutionary”, it sounds much like the integrated security modules discussed above:

Our vision for the future of Windows PCs is security at the very core, built into the CPU, where hardware and software are tightly integrated in a unified approach designed to eliminate entire vectors of attack. This revolutionary security processor design will make it significantly more difficult for attackers to hide beneath the operating system, and improve our ability to guard against physical attacks, prevent the theft of credential and encryption keys, and provide the ability to recover from software bugs.

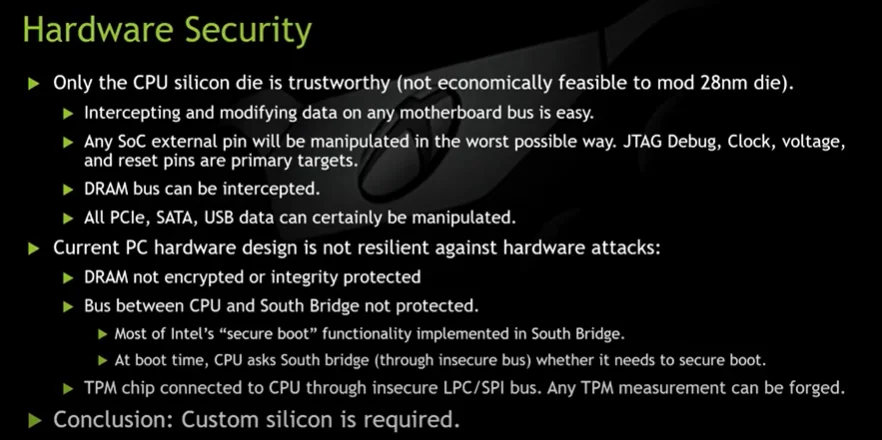

It’s also hard to call it revolutionary when it’s exactly what Microsoft has in the Xbox One, which has better hardware security than most Windows computers. For more on how they came to the same conclusion as Apple, see this interesting presentation from last year. An early slide lays out their reasoning that the only answer is custom silicon:

BlueHat Seattle 2019 || Guarding Against Physical Attacks: The Xbox One Story

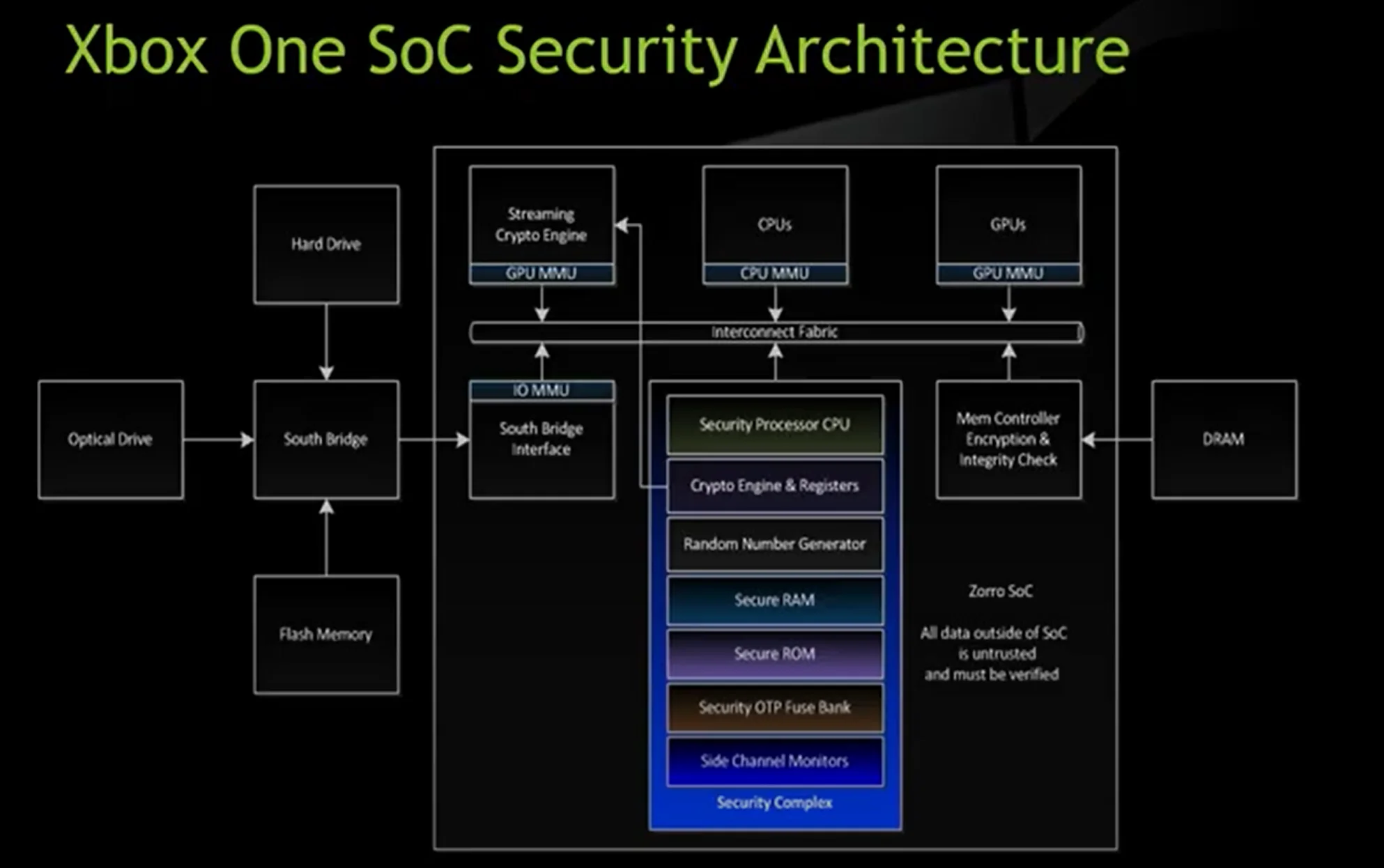

Their implementation is shown in a later diagram, where we have a “security complex” as a part of the SoC responsible for implementing core security operations:

BlueHat Seattle 2019 || Guarding Against Physical Attacks: The Xbox One Story

Summary

Hopefully that was an interesting and useful summary of the different hardware security approaches out there. Whilst their benefits are clear, the one major drawback of a hardware approach is that mistakes are rarely easy to fix. Bugs in hardware devices tend to persist until the next hardware revision, which does nothing to help people with old devices, so it can mean checkm8 for the attackers.

Microsoft’s announcement of the Pluton chip is interesting, and is a good move. But given the rate at which most people replace their computers, we’re not going to see it in most Windows machines for a few years. It’s a little odd, given how early they were with the TPM, that it’s taken them this long to catch up with Apple and ARM’s approach.

There is still an interesting contrast between the approaches for computers and for mobile devices. Both the TPM and the T2 chip focus mainly on supporting boot-time checks and disk encryption, which tend to be one-off events at boot. The SEP and TrustZone do that too, but also support more regular security functions, such as user authentication. It’ll be interesting to see whether Microsoft use the Pluton chip to support, for example, regular login and account authentication. That would be a step forward in protecting not only a powered-off Windows computer, but also one that’s powered on and locked.

References

Pluton Coverage

- Microsoft Is Making a Secure PC Chip—With Intel and AMD’s Help, Wired

- “Microsoft Pluton Hardware Security Coming to Our CPUs”: AMD, Intel, Qualcomm, AnandTech

Other References

- Nice analysis from Duo of the T2 chip

- Amazing research on exploiting TrustZone to enable Breaking Android Full Disk Encryption